Best Practices

Object access

S3 Storage supports the following URL styles to access objects in a bucket.

| Access style | Example | Use case |
|---|---|---|
| Path-style URL | https://ds12s3.swisscom.com/MYBUCKET/MYOBJECT | The bucket name follows the host name as the first path segment. |
| Namespace-style URL | https://MYNAMESPACE.ds12s3ns.swisscom.com/MYBUCKET/MYOBJECT | Only used in rare cases. For static objects such as an HTML page, namespace-style can be used to access the objects. |
| Virtual host-style URL | https://MYBUCKET.ds12s3.swisscom.com/MYOBJECT | The bucket name is part of the host name and is set as its prefix. |
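
As an illustration of the three URL styles in the table, the following sketch assembles each form from its parts. The function names are hypothetical and chosen only for this example; the endpoints are the ones listed in the table.

```python
from urllib.parse import quote

# Endpoints taken from the table above.
S3_ENDPOINT = "ds12s3.swisscom.com"
S3_NAMESPACE_ENDPOINT = "ds12s3ns.swisscom.com"


def path_style_url(bucket: str, key: str) -> str:
    """Bucket is the first path segment after the host name."""
    return f"https://{S3_ENDPOINT}/{bucket}/{quote(key)}"


def virtual_host_style_url(bucket: str, key: str) -> str:
    """Bucket is a prefix of the host name."""
    return f"https://{bucket}.{S3_ENDPOINT}/{quote(key)}"


def namespace_style_url(namespace: str, bucket: str, key: str) -> str:
    """Namespace is a prefix of the host name, bucket stays in the path."""
    return f"https://{namespace}.{S3_NAMESPACE_ENDPOINT}/{bucket}/{quote(key)}"


if __name__ == "__main__":
    print(path_style_url("MYBUCKET", "MYOBJECT"))
    print(virtual_host_style_url("MYBUCKET", "MYOBJECT"))
    print(namespace_style_url("MYNAMESPACE", "MYBUCKET", "MYOBJECT"))
```

`quote` percent-encodes characters such as spaces in the object key while leaving `/` untouched, so keys with path-like prefixes keep their structure.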

ds12s3.swisscom.com vs. ds12s3ns.swisscom.com

- In most use cases ds12s3.swisscom.com is the preferred way to access the Object Storage S3 service.

- ds12s3ns.swisscom.com is normally only used for static webpages, where unauthenticated access is required.

- For a basic example with the S3 Browser application, please follow this link

Private vs. public (internet) access

- Private: Data is only accessible within the customer network via ds12s3.swisscom.com or ds12s3ns.swisscom.com.

- Public: Data is accessible via ds12s3.swisscom.com or ds12s3ns.swisscom.com and from the internet via ds11s3.swisscom.com or ds11s3ns.swisscom.com.

- Be extremely careful when exposing your data to the internet.

- When an S3 instance is created, the internet-accessible flag is disabled by default. For security reasons, this flag cannot be changed in either direction after the S3 instance has been created, not even with a service ticket, because internet-accessible S3 services use a different setup by design.

If the access is set wrong by mistake, you have the following options:

If there is no data:

- delete existing users and S3 service.

- create a new S3 service with the appropriate access.

If there is already data:

- create an additional user.

- delete the existing users (if the credentials are too widely spread)

- create a new S3 service with the appropriate access.

- copy the data to the new S3 service with the S3 tool of your choice (there is no Swisscom-provided copy service available at this point).

- delete the data in the old S3 service.

- delete the old S3 user and the old S3 service.

Naming Conventions

Bucket Name

The following rules apply to the naming of S3 buckets in ECS:

- The bucket name must be between 3 and 63 characters long.

- The bucket name must contain only lowercase alphanumeric characters and dashes (it follows DNS rules).

The name must not:

- Start with a dot (.)

- Contain a double dot (..)

- End with a dot (.)

- Be formatted as an IPv4 address.
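
The rules above can be checked on the client before a bucket is created. The following is a minimal sketch that only mirrors the rules listed in this section; the function name is hypothetical, and the service itself may apply further checks.

```python
import re

# Lowercase letters, digits and dashes only, as stated in the rules above.
_ALLOWED_CHARS = re.compile(r"[a-z0-9-]+")
# Four dot-separated groups of one to three digits, e.g. 192.168.1.1.
_IPV4_SHAPE = re.compile(r"\d{1,3}(\.\d{1,3}){3}")


def bucket_name_problems(name: str) -> list[str]:
    """Return a list of rule violations; an empty list means the name passes."""
    problems = []
    if not 3 <= len(name) <= 63:
        problems.append("length must be between 3 and 63 characters")
    if not _ALLOWED_CHARS.fullmatch(name):
        problems.append("only lowercase letters, digits and dashes are allowed")
    if name.startswith(".") or name.endswith(".") or ".." in name:
        problems.append("must not start or end with a dot or contain '..'")
    if _IPV4_SHAPE.fullmatch(name):
        problems.append("must not be formatted as an IPv4 address")
    return problems


if __name__ == "__main__":
    for candidate in ("my-bucket-01", "My_Bucket", "192.168.1.1"):
        print(candidate, bucket_name_problems(candidate))
```

Returning all violations at once, rather than stopping at the first, makes it easier to report every problem with a proposed name in a single pass.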

Object Name

The following rules apply to the naming of ECS S3 objects:

- Cannot be null or an empty string

- Length must be between 1 and 255 Unicode characters

- Characters are not validated!

Advanced Features and settings

S3 Features

Supported S3 features can be found here

Archive Safe

Archive Safe is a WORM feature that can be set on an S3 bucket. It is not part of the AWS standard but a feature of Dell ECS. When an S3 instance is created with Archive Safe, Swisscom can create new buckets with any desired retention period in that instance. To request one, create a ticket to Swisscom stating the S3 instance (ID or namespace ID), the desired bucket name and the desired retention period.

With this functionality, the S3 backend does not allow objects to be overwritten or deleted during the desired period. To check the remaining retention period of a bucket, read the "x-emc-retention-period" header of the bucket.

S3 Object Lock

With S3 Object Lock, objects can be stored in a WORM (write-once-read-many) model. Objects can be prevented from being overwritten or deleted for a fixed amount of time. All S3 services can use Object Lock. Object Lock can be applied either per object or at bucket level (for the whole bucket). The usage is the same as described for the AWS S3 API.

Automatic Cleanup of expired object versions

Because S3 Object Lock always generates new versions of a file and preserves them for a fixed amount of time, it is useful to create a lifecycle policy that removes object versions once the retention period is over. For example, suppose a bucket-wide Object Lock policy prevents the deletion of objects for 30 days. A lifecycle policy can then automatically clean up all noncurrent versions after 31 days without touching the current versions. This can be achieved with the following lifecycle policy:

(Note that the policy also includes an automatic cleanup of incomplete MPUs, which we strongly recommend; see below.)

{
  "Rules": [
    {
      "ID": "CleanupNonCurrentVersionsAndMPU",
      "Status": "Enabled",
      "Filter": {
        "Prefix": ""
      },
      "AbortIncompleteMultipartUpload": {
        "DaysAfterInitiation": 7
      },
      "Expiration": {
        "ExpiredObjectDeleteMarker": true
      },
      "NoncurrentVersionExpiration": {
        "NoncurrentDays": 31
      }
    }
  ]
}
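
The relationship between the Object Lock retention period and the cleanup rule (noncurrent versions expire one day after the lock ends) can be sketched as a small generator. The function name and the `mpu_days` parameter are hypothetical; the resulting document has the same structure as the policy above.

```python
import json


def cleanup_lifecycle_policy(retention_days: int, mpu_days: int = 7) -> dict:
    """Build a lifecycle policy that expires noncurrent versions one day
    after the Object Lock retention period and aborts incomplete MPUs."""
    if retention_days < 1 or mpu_days < 1:
        raise ValueError("retention_days and mpu_days must be at least 1")
    return {
        "Rules": [
            {
                "ID": "CleanupNonCurrentVersionsAndMPU",
                "Status": "Enabled",
                "Filter": {"Prefix": ""},
                "AbortIncompleteMultipartUpload": {"DaysAfterInitiation": mpu_days},
                "Expiration": {"ExpiredObjectDeleteMarker": True},
                # One day more than the lock, so locked versions are never targeted.
                "NoncurrentVersionExpiration": {"NoncurrentDays": retention_days + 1},
            }
        ]
    }


if __name__ == "__main__":
    # A 30-day bucket-wide Object Lock yields NoncurrentDays = 31.
    print(json.dumps(cleanup_lifecycle_policy(30), indent=2))
```

The printed JSON can be saved to a file and applied with the S3 tool of your choice.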

S3 instance vs S3 user

- By Amazon IAM design, data is owned by the S3 service (namespace) and not by the S3 user.

- All S3 users within an S3 instance are able to access the same data. If this is not desired, a new S3 instance needs to be created.

Advanced IAM Rules

To simplify the user experience, only a subset of the IAM possibilities is currently supported. In case of special requirements, please get in touch with us via the normal support process.

ACL on buckets

We do not recommend protecting objects with ACLs at object or bucket level. We recommend using the predefined user roles of read/write or read-only.

Bucket Policies

There are many ways to restrict access to buckets further. If a concept with many users is planned and restrictions for single users on buckets should be applied, a special notation method must be used. For the policy creation, you need:

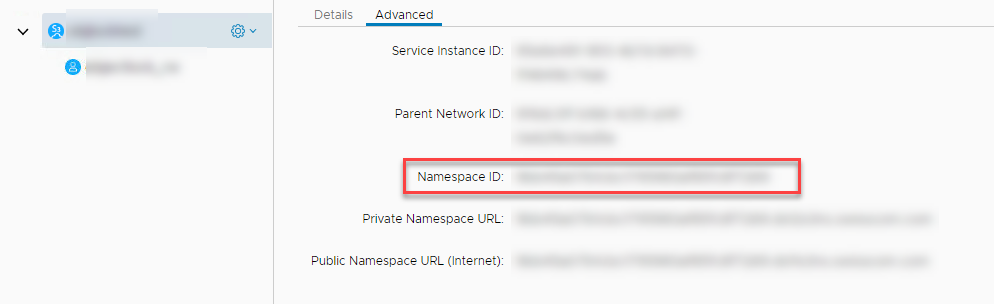

Namespace ID You find the namespace ID in the portal in the advanced tab of your Object Storage S3 Service:

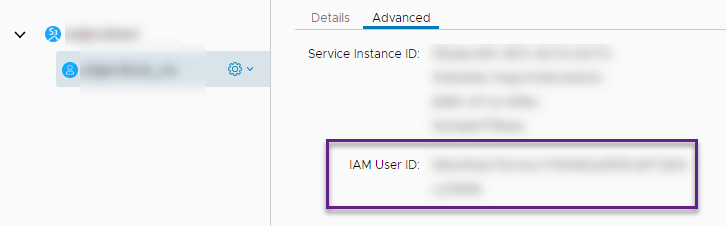

IAM User ID You find the User ID in the portal in the advanced tab of your Object Storage S3 User:

To tailor a more complex bucket policy with a user restriction, the following notation is needed:

urn:ecs:iam::[Namespace ID]:user/[IAM User ID]

A very simple example of such a bucket policy is shown below.

- On [Bucket Name] all access from any other User is blocked ("NotPrincipal")

- On [Bucket Name] only access from the specific user is allowed ("Principal")

{
  "Version": "2012-10-17",
  "Id": "S3PolicyId",
  "Statement": [
    {
      "Action": [
        "s3:*"
      ],
      "Resource": [
        "[Bucket Name]/*",
        "[Bucket Name]"
      ],
      "Effect": "Deny",
      "NotPrincipal": {
        "AWS": "urn:ecs:iam::[Namespace ID]:user/[IAM User ID]"
      },
      "Sid": "denyAllOtherUsers"
    },
    {
      "Action": [
        "s3:*"
      ],
      "Resource": [
        "[Bucket Name]/*",
        "[Bucket Name]"
      ],
      "Effect": "Allow",
      "Principal": {
        "AWS": "urn:ecs:iam::[Namespace ID]:user/[IAM User ID]"
      },
      "Sid": "allowUser"
    }
  ]
}
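
To avoid filling in the placeholders by hand, the policy above can be generated from the three inputs. This is only a sketch: the function names and the sample values in the usage section are hypothetical, while the statement structure and the URN notation follow the example above.

```python
import json


def ecs_user_urn(namespace_id: str, user_id: str) -> str:
    """Build the user URN in the notation described above."""
    return f"urn:ecs:iam::{namespace_id}:user/{user_id}"


def single_user_bucket_policy(bucket: str, namespace_id: str, user_id: str) -> dict:
    """Deny every other user on the bucket and allow the given user."""
    principal = {"AWS": ecs_user_urn(namespace_id, user_id)}
    resources = [f"{bucket}/*", bucket]
    return {
        "Version": "2012-10-17",
        "Id": "S3PolicyId",
        "Statement": [
            {
                "Action": ["s3:*"],
                "Resource": resources,
                "Effect": "Deny",
                "NotPrincipal": principal,
                "Sid": "denyAllOtherUsers",
            },
            {
                "Action": ["s3:*"],
                "Resource": resources,
                "Effect": "Allow",
                "Principal": principal,
                "Sid": "allowUser",
            },
        ],
    }


if __name__ == "__main__":
    # Placeholder values for illustration only.
    policy = single_user_bucket_policy("my-bucket", "my-namespace-id", "my-user-id")
    print(json.dumps(policy, indent=2))
```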

Data encryption

During Transit

Data is always encrypted during transit (HTTPS).

At Rest

By default, data is not encrypted at rest on your S3 service. With the "Encryption Enabled" option, encryption can be enabled for the entire S3 service instance (namespace level).

If encryption at rest is desired at tenant level for new Object Storage S3 deployments, please open a service request. The option then enforces encryption on new Object Storage S3 instances by default, so the user does not have to set the corresponding input parameter explicitly.

Advanced Encryption Possibilities

If you need more fine-grained data encryption (e.g. at bucket level), there are several other possibilities:

- Encryption/decryption on the client for uploads to and downloads from the Object Storage S3 service. This gives the highest possible security, as the encryption is fully in your hands and no keys are stored within the system.

- Server-side encryption with a customer-provided encryption key. The encryption for each request is done on the S3 system, with some performance impact. If you lose the encryption key, the data is lost. Neither Swisscom nor the system vendor is able to retrieve the key.

- Server-side encryption with a system-generated encryption key. This method only protects your data against the extremely unlikely event of disk theft. Even if disks are stolen from a datacenter, it is close to impossible to access your data, as the objects are stored in fragments and distributed over many different disks. This method does not protect the data against access key theft.

Not all S3 clients support server-side encryption.
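
For server-side encryption with a customer-provided key, the AWS S3 API expects three request headers: the algorithm, the base64-encoded 256-bit key, and the base64-encoded MD5 digest of the key. The sketch below builds them, assuming the service accepts the same headers as AWS S3; the function name is hypothetical, and most S3 clients can set these headers for you.

```python
import base64
import hashlib
import os


def sse_c_headers(key: bytes) -> dict[str, str]:
    """Build the SSE-C request headers defined by the AWS S3 API."""
    if len(key) != 32:
        raise ValueError("SSE-C with AES256 requires a 32-byte (256-bit) key")
    key_md5 = hashlib.md5(key).digest()
    return {
        "x-amz-server-side-encryption-customer-algorithm": "AES256",
        "x-amz-server-side-encryption-customer-key": base64.b64encode(key).decode("ascii"),
        "x-amz-server-side-encryption-customer-key-MD5": base64.b64encode(key_md5).decode("ascii"),
    }


if __name__ == "__main__":
    # Generate a random key; store it safely, because without it the data is lost.
    key = os.urandom(32)
    for name in sse_c_headers(key):
        print(name)
```

The same headers must be sent again on every download of the object, which is why losing the key makes the data unrecoverable.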

Multipart Upload (MPU)

Nearly all tools for accessing data provide a function to upload large objects as a multipart upload. With this procedure, the file is split into multiple parts, which can be uploaded in parallel streams. This increases performance significantly. We recommend a part size of 8 MiB.
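
To make the splitting concrete, the following sketch computes the byte ranges of the parts for a given object size with the recommended 8 MiB part size. The function name is hypothetical; real clients perform this calculation internally.

```python
PART_SIZE = 8 * 1024 * 1024  # recommended part size: 8 MiB


def plan_parts(object_size: int, part_size: int = PART_SIZE) -> list[tuple[int, int]]:
    """Return (offset, length) for each part; only the last part may be shorter."""
    if object_size < 0 or part_size <= 0:
        raise ValueError("object_size must be >= 0 and part_size must be > 0")
    return [
        (offset, min(part_size, object_size - offset))
        for offset in range(0, object_size, part_size)
    ]


if __name__ == "__main__":
    parts = plan_parts(100 * 1024 * 1024)  # a 100 MiB object
    print(len(parts), "parts, last part is", parts[-1][1], "bytes")
```

For a 100 MiB object this gives 12 full parts of 8 MiB and one final part of 4 MiB, 13 parts in total, each of which can be uploaded in its own stream.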

Incomplete multipart uploads

If an MPU is aborted (for any reason), an incomplete multipart upload remains. By default, incomplete MPUs are not cleaned up by the system. As long as incomplete MPUs remain, a bucket cannot be deleted. A lifecycle policy can be implemented to automatically clean up incomplete MPUs after a certain time. Here is the basic policy (it deletes all incomplete MPUs 7 days after initiation):

{
  "Rules": [
    {
      "ID": "CleanupIncompletedMPU",
      "Status": "Enabled",
      "Filter": {
        "Prefix": ""
      },
      "AbortIncompleteMultipartUpload": {
        "DaysAfterInitiation": 7
      }
    }
  ]
}

Example: checking for incomplete MPUs with the MinIO client

$ mc ls <my-alias>/<my-bucket-name> -I

As long as incomplete MPUs are listed here, the bucket cannot be deleted.

Example: setting up automatic MPU cleanup with the MinIO client

Add the policy shown above to a JSON file (in this example mypolicy.json).

Set the bucket policy:

$ mc ilm import <my-alias>/<my-bucket-name> < mypolicy.json

Lifecycle configuration imported successfully to `<my-alias>/<my-bucket-name>`.

Check the bucket policy:

$ mc ilm ls <my-alias>/<my-bucket-name>

ID Prefix Enabled Expiry Date/Days Transition Date/Days Storage-Class Tags

CleanupIncompletedMPU ✓ ✗ ✗

(optional) Export the bucket policy to a local JSON file:

$ mc ilm export <my-alias>/<my-bucket-name> > mypolicy.json

(optional) Remove the bucket policy:

$ mc ilm rm --id "CleanupIncompletedMPU" <my-alias>/<my-bucket-name>

Rule ID `CleanupIncompletedMPU` from target <my-alias>/<my-bucket-name> removed.