MPDS Overview

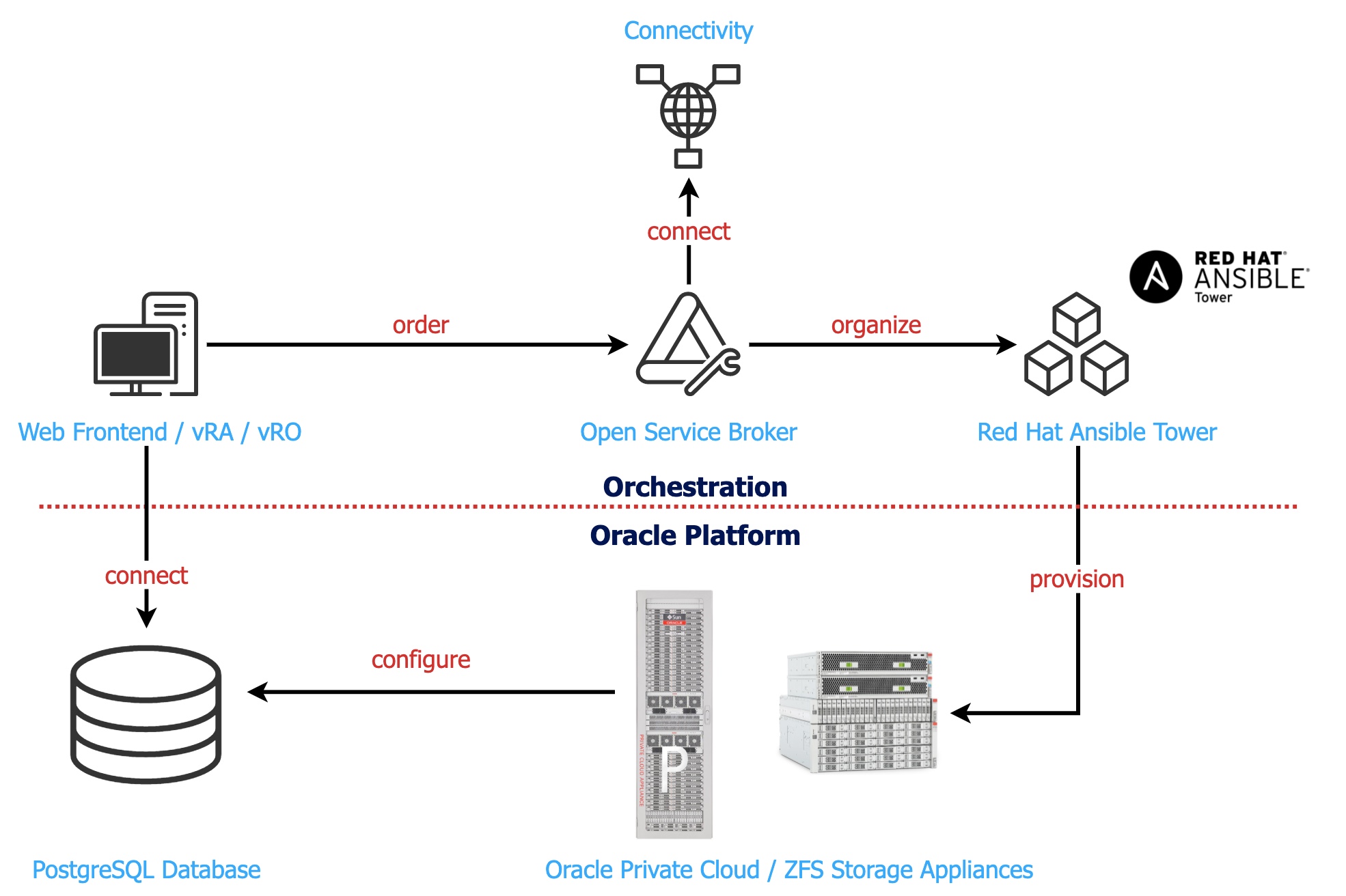

MPDS (Managed PostgreSQL Database Service) is a fully automated managed database service running on Oracle Private Cloud Appliances (PCA) and Oracle ZFSSA storage systems. The PostgreSQL Database Management System runs on top of the virtual Oracle Linux environment (OVM). The following picture shows the MPDS architecture with the orchestration layer and the Platform layer:

MPDS runs on Oracle Private Cloud Appliance engineered systems. The systems are located in geo-redundant Swisscom datacenters.

With MPDS the customer gets administrative access to the PostgreSQL instances on top of a managed PostgreSQL container. The container is managed by swisscom/Trivadis. The customers have no access to the OS, and only very limited read access to the container.

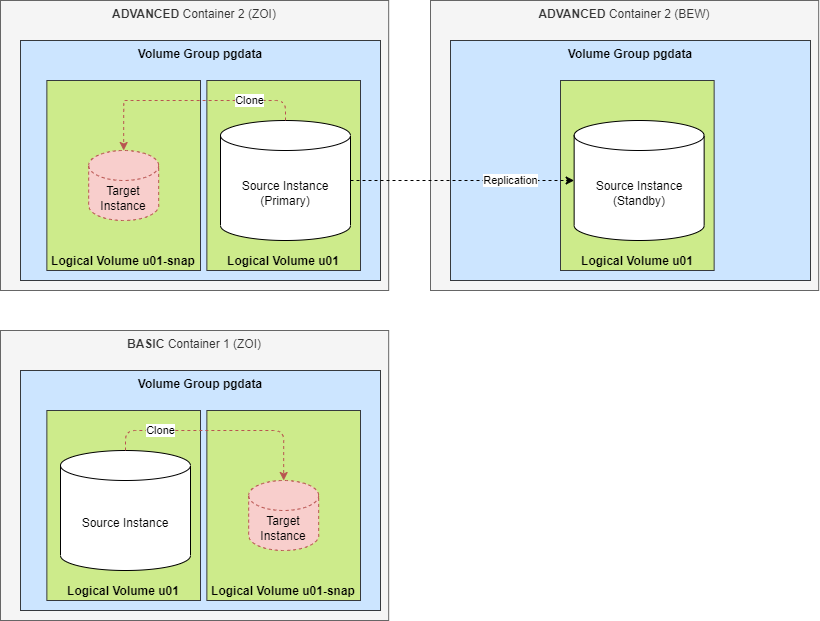

The Managed PostgreSQL Database Service is available with two different plans:

- MPDS BASIC: single instance database - service is deployed in one data center only

- MPDS ADVANCED: primary/standby database configuration - the data is replicated to a second data center in order to provide high data and service availability

PostgreSQL Deployment

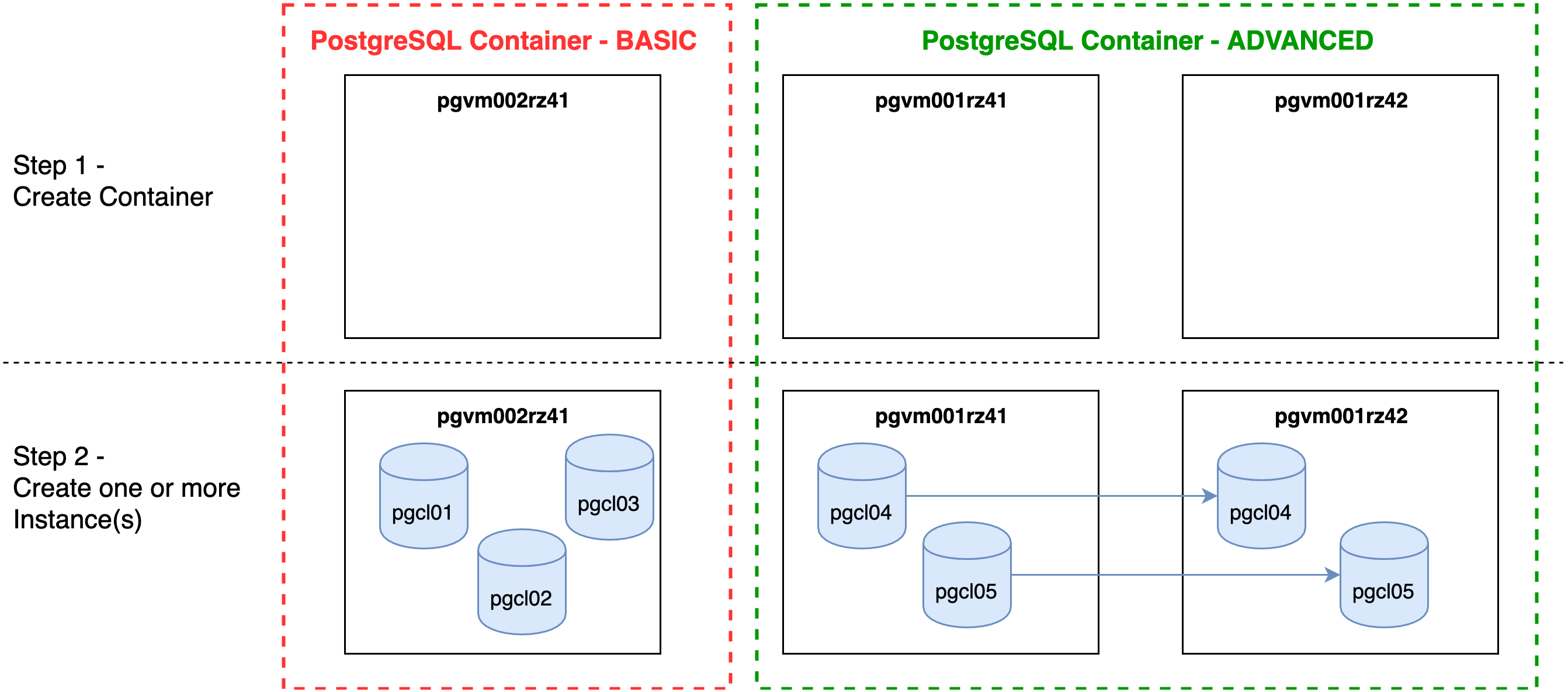

The deployment of a PostgreSQL database instance follows a two-step approach:

- Step 1: create a container (VM)

- Step 2: create one or more database instances (a.k.a. database cluster)

As illustrated in the figure above, the container/instance architecture can be used for database consolidation, and for high-availability setups as well. In order to evaluate the most suitable deployment option, the following points need to be considered:

- Some settings (like plan, or encryption) are common for all PostgreSQL instances within a certain container

- The PostgreSQL instances in a container share the VM specific resources like vCPUs and memory

- The more instances you deploy in one container, the smaller the usable share per instance

- Within MPDS, the number of instances per container is limited to four

- MPDS allocates 8 GB RAM per vCPU (this value cannot be changed)

- The maximum vCPU allocation per container is limited by the physical memory capacity of the compute node (768 GB physical RAM - 64GB host RAM = 704 GB; 704:8 = 88 vCPU)

Behind the scene, a container consists of one VM (BASIC) or two VMs (ADVANCED), and the required storage configuration for the database files. After the deployment the credentials and the connect string per instance will be provided. This way, the customer can connect to every instance and create its own databases, users, tables, etc.

BASIC Plan

With the MPDS BASIC plan a postgreSQL container will be provisioned in one datacenter. MPDS BASIC plan does not provide geo-redundancy like the ADVANCED plan.

ADVANCED Plan

With the ADVANCED plan MPDS supports high availability based on a hot standby setup. The customer can choose between three replication modes:

- ASYNC mode (synchronous_commit = off) is the default and uses asynchronous network transport without automatic failover.

- SYNC_MANUAL mode (synchronous_commit = on) enables synchronous network transport without automatic failover in case the Master instance crashes.

- SYNC_AUTO mode (synchronous_commit = on with automatic failover) enables synchronous network transport between the Master and the Standby database including automatic failover in case the Master instance crashes.

The customer can switch between the replication modes. For further details see Edit Instance.

Replication mode SYNC (synchronous_commit = on) blocks the primary instance in case the standby instance is not available (similar to MaxProtection mode in Oracle Data Guard). However, PostgreSQL allows to change the behavior on the fly in case something happens with the standby. The parameter synchronous_commit can be set dynamically even within the session to LOCAL. This means the commits will not wait for the standby and the application is not affected. For details pls. refer the PostgreSQL Documentation.

Backup and Restore

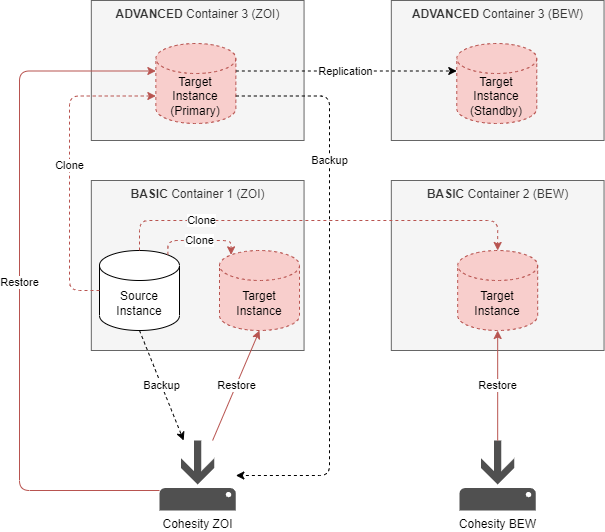

Instance backup can be enabled by choosing the backup checkbox while creating the instance, or as day-2 action. By default, database instance backup is not enabled. If the backup option is selected, an instance backup will be set up with point in time restore capabilities.

- Backup schedule is daily incremental, and weekly full

- Backup compression is always enabled

- Backup encryption is not enabled by default

- Backup retention can be chosen from portal (3, 10 or 30 days; default is 3 days)

- Backup and restore functionality is implemented with pgBackRest.

If backup encryption is selected, the backup files are encrypted. Choosing this option can cause higher CPU load during the backup operation. Disabling/Enabling backup encryption at a later point (edit instance) will make existing backups unaccessible and the retention starts from zero. The operation deletes existing backups and creates a new full backup.

Choosing the backup option causes additional storage costs. The amount of storage used on the backup system is charged in GB.

Backup for ADVANCED setup is the same as for BASIC (local backups on primary instance), but with role-based scheduling approach.

Point-in-time restore functionality is available in portal (see Restore Instance).

Instance Cloning

This feature will be available as of March 11, 2022!

MPDS offers the possibility to clone an existing instance with the same content. The clone resource action "Clone Instance" in the cloud portal can be started from the instance menu. There are two types of clones available:

- RESTORE: An existing instance is cloned based on its backup into the same or another container. The desired timestamp (UTC) is entered directly in the cloud portal. Additionally, the user must choose the target container where the clone should be located.

- SNAPSHOT: An existing instance is cloned based on a storage snapshot into the same container.

RESTORE Clone

Important characteristics of the RESTORE clone:

- The clone instance can be in the same or another container.

- The source instance must have the backup option enabled.

- The timestamp for the clone restore must be within the backup retention period.

- The clone instance has the same major release as the source instance (eg. version 14).

- The clone execution time depends on the instance size and the related the restore duration.

- The cloned instance has the same size as its source.

- If the clone instance is restored into a container with plan ADVANCED, the clone will be replicated (standby) as well.

Note: If the ADVANCED option is in use then the "complete" restore of an advanced instance will take some time. Reason behind that is simple: First the primary side will be restored and then fully synced to the secondary side. The bigger the instance the longer it will take.

SNAPSHOT Clone

Important characteristics of the SNAPSHOT clone:

- The cloned instance is located in the same container.

- The clone is based on a snapshot of the source instance and the data is related to the time when the snapshot was taken.

- The clone instance has the same major release as the source instance.

- The clone execution time does not depend on the instance size because a snapshot is an instant operation that takes only a few seconds.

- The clone instance has a small initial size and grows with the changes in the source and target instance.

- The source instance cannot be deleted until depending snapshots exist.

- The storage auto-extension feature is not available for containers that contain snapshot clones.

- The source instance remains writable when snapshots exist.

- Snapshot clones of snapshot clones are not supported.

- If the snapshot clone instance belongs to a container with plan ADVANCED it is not replicated (no standby). In case of a failover the snapshot instance is lost

Recommendations for SNAPSHOT clones

- Use only one master instance per container and create n snapshots from it.

- Do not change much data in the master while snapshots exist. Delete all depending snapshots first before refreshing data in the master instance. Because of the copy-on-write technology changes in the master consume space within the snapshots.

Monitoring

Event monitoring of container and instances is integral part of the platform operation. Following pgOperate checks are implemented:

Checks BASIC

| Check | Description |

|---|---|

| PG_CHECK_WAL_COUNT | check amount of WAL logfiles, prevents overfilling disk |

| PG_CHECK_DEAD_ROWS | check dead rows, indicates that autovacuum might be not correctly configured |

| PG_CHECK_MAX_CONNECT | check availble connections in Postgres cluster |

| PG_CHECK_LOGFILES | check logfiles for errors |

| PG_CHECK_FSPACE | check available disk space |

Checks ADVANCED

| Check | Description |

|---|---|

| PG_CHECK_WAL_COUNT | check amount of WAL logfiles, prevents overfilling disk |

| PG_CHECK_DEAD_ROWS | check dead rows, indicates that autovacuum might be not correctly configured |

| PG_CHECK_STDBY_STATUS | check status of standby database |

| PG_CHECK_STDBY_TR_DELAY_MB | check transport lag of standby database |

| PG_CHECK_STDBY_AP_DELAY_MB | check apply lag of standby database |

| PG_CHECK_MAX_CONNECT | check available connections in Postgres cluster |

| PG_CHECK_LOGFILES | check logfiles for errors |

| PG_CHECK_FSPACE | check available disk space |

Alerts are not propagated to the customers. Nevertheless, monitoring information can be retrieved with SQL statements from various statistics views.

Security

The MPDS security architecture is based on container and instance isolation measures.

Container Isolation

- Hardening of the container OS according to CIS benchmark DISA STIG for Oracle Linux 7/8

- Container separation by dedicated users, ports, and disks

- Remote connections are restricted to dedicated subnets via pg_hba.conf (ACLs)

Instance Isolation

- PostgreSQL instances are separated by dedicated OS user (no escape to other instance possible)

- SSL encryption

- Instance hardening according to CIS benchmark

- Strong password policy, and enforcement to change the initial password

- Encrypted password

- Dedicated ports

- Encrypted client/server communication

- auth_delay extensions to prevent brut force attacks

Following privileges are granted to user mpds_admin:

- CREATEDB: Ability to create databases in the cluster

- CREATEROLE: Ability to create other roles with the same privilege level or lower. This means the mpds_admin cannot create a role with the SUPERUSER privilege.

- LOGIN: Ability to login to the PostgreSQL database cluster instance

- Role permissions: pg_monitor, pg_read_all_settings, pg_read_all_stats, pg_stat_scan_tables

mpds_admin does not have superuser privileges!

Auditing with pgaudit

Database, object, and statement auditing is part of the security concept.

Database auditing with pgaudit is available with all MPDS deployments. The following statements are audited by default:

- ROLE: Statements related to roles and privileges: GRANT, REVOKE, CREATE/ALTER/DROP ROLE

- DDL: all DDL which is not included in the ROLE clause, e.g CREATE TABLE etc.

- MISC: Miscellaneous commands, e.g. DISCARD, FETCH, CHECKPOINT, VACUUM, SET

- MISC_SET: Miscellaneous SET commands, e.g. SET ROLE.

Object auditing can also be enabled. For that, the predefined role 'mpds_audit' can be used. To enable object auditing for a table simply grant select to the above role:

GRANT SELECT ON test_schema.test_table TO mpds_audit;

Statement auditing: If you need need statement logging, you could achieve this by populating the role/user name you like to enable statement logging for the audit.grant_log_statement_all_to_roles:

INSERT INTO audit.grant_log_statement_all_to_roles VALUES ('app_user', NULL, NULL);

The PostgreSQL parameter log_statement is by default not set to all, because of potential performance overhead. Statement logging will be enabled asynchronously after about 15 minutes. To disable statement logging remove the user name from the table mentioned above:

DELETE FROM audit.grant_log_statement_all_to_roles;

PostgreSQL Versions

MPDS is available with PostgreSQL version 13, and newer.

See PostgreSQL Releases for the list of availabe releases.